My goal was to convert a recording of someone singing or humming to a MIDI file. For those unfamiliar, a MIDI file is basically digital sheet music. Therefore, the software needs to detect the pitch and length of the notes sung. I started this project in 2017 in my second year of higher technical college (HTL). It was a very ambitious goal. It took me years of failing, giving up, and trying again a few months later to arrive at a functioning algorithm. The only programming language I knew back then was C. That's why this project is written in C.

My first attempt used the waveform directly. It shifted the wave in time and compared it to the original to see where the two line up best. The shift with the best match gives the length of one period, and from that the frequency, or pitch. This method had a few problems. The wave lines up just as well, and sometimes even better, at two or three times the period, and so on. There was also no way to detect the transition from one note to the next, except through the overall amplitude of the wave, which can be influenced by background noise and the like.
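As a rough illustration of this shift-and-compare idea, here is a minimal sketch. It is not the original code: the function name `estimate_period`, its parameters, and the use of the mean squared difference as the measure of "lining up" are all assumptions made for this example.

```c
#include <stddef.h>

/* Return the lag (in samples) between min_lag and max_lag at which the
 * signal best matches a shifted copy of itself, or 0 if no lag fits.
 * "Best match" means the smallest mean squared difference here, which is
 * an assumption; other comparisons (e.g. a correlation sum) work too. */
size_t estimate_period(const float *x, size_t n, size_t min_lag, size_t max_lag)
{
    size_t best_lag = 0;
    double best_err = 0.0;

    if (min_lag == 0)
        min_lag = 1; /* a lag of 0 always matches perfectly and tells us nothing */

    for (size_t lag = min_lag; lag <= max_lag && lag < n; lag++) {
        size_t count = n - lag; /* number of overlapping samples */
        double err = 0.0;

        for (size_t i = 0; i < count; i++) {
            double d = (double)x[i] - (double)x[i + lag];
            err += d * d;
        }
        err /= (double)count;

        /* Keep the first lag with the strictly smallest error. */
        if (best_lag == 0 || err < best_err) {
            best_err = err;
            best_lag = lag;
        }
    }
    return best_lag;
}
```

The frequency then follows as the sample rate divided by the returned lag. If `max_lag` is large enough to include two or three periods, those lags produce an error just as small as the true period, which is exactly the ambiguity described above.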

Then I tried converting the signal into the frequency domain using an FFT (fast Fourier transform) library. The problem with this method is that the frequencies an FFT gives you are on a linear scale, whereas human perception, and therefore music, works on a logarithmic scale. A Fourier transform can be evaluated at any frequency; only the FFT is tied to linearly spaced frequencies, because it computes all of them at once.

Knowing that, I wrote my own Fourier transform using a logarithmic scale.

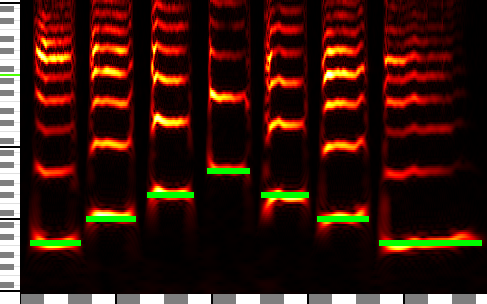

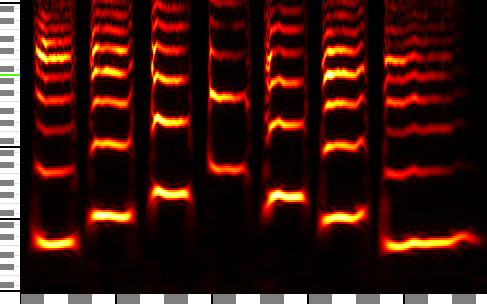

After a bit of refinement, I arrived here:

To produce this image, I wrote a simple visualization tool in C, which I won't go into further here.

A human can see relatively easily what the notes are. The "shadows" above the notes are overtones. Every instrument and every voice has overtones. Their amplitudes and phase shifts are what differentiate one instrument from another. Of course, I only care about the fundamental frequencies. Just taking the frequency with the highest amplitude wouldn't work, because an overtone can sometimes have a higher amplitude than the fundamental. The first overtone has twice the fundamental frequency and is therefore exactly one octave higher.